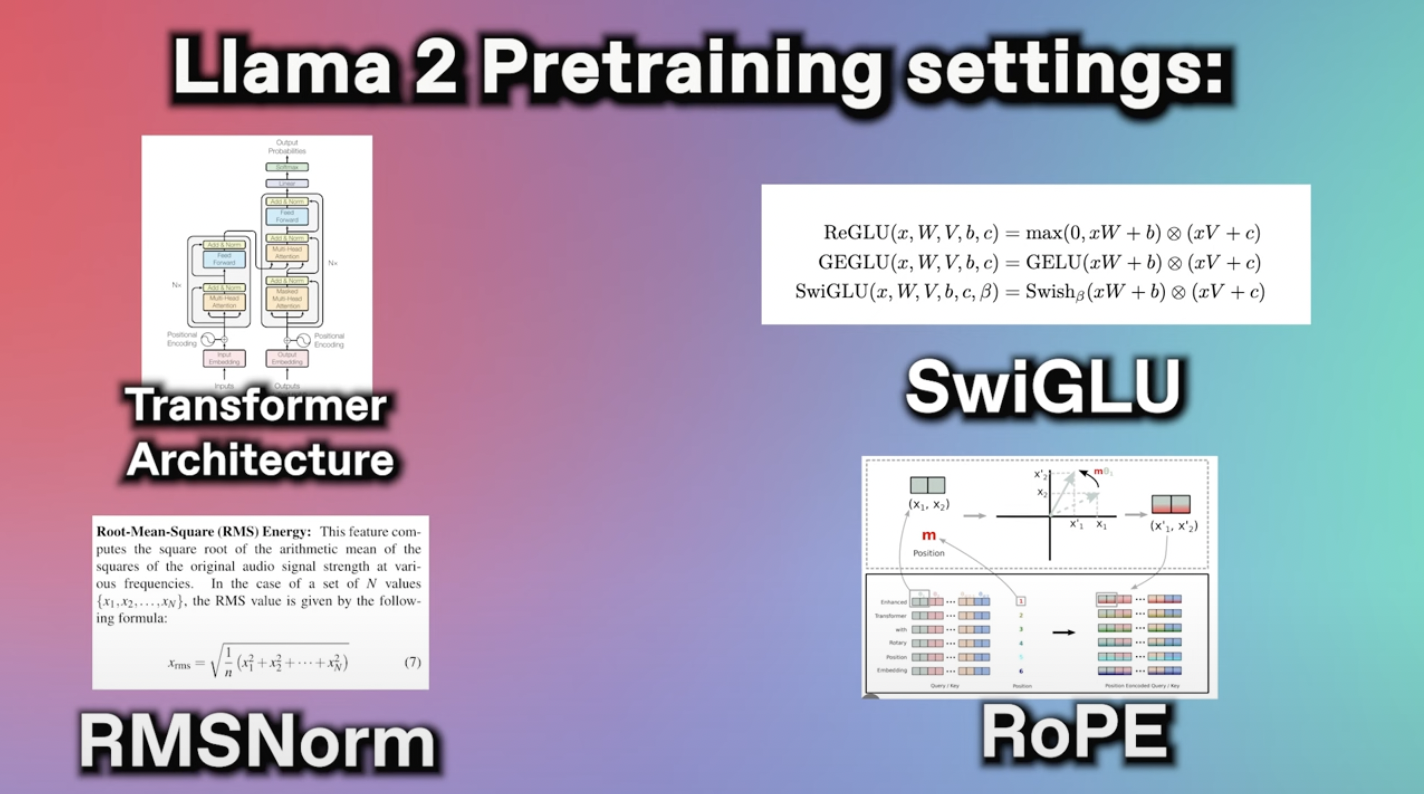

In this work we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. Llama 2, much like other AI models, is built on a classic Transformer architecture. To make the 2 trillion (2,000,000,000,000) tokens and internal weights easier to handle, Meta ... Feel free to follow along with the video for the full context: "Llama 2 Explained - Arxiv Dives w/ Oxen.ai". The Llama 2 research paper details several advantages the newer generation of AI models offers over the original LLaMA models.

AI Breakdown, or Takeaways From the 78-Page Llama 2 Paper (Deepgram)

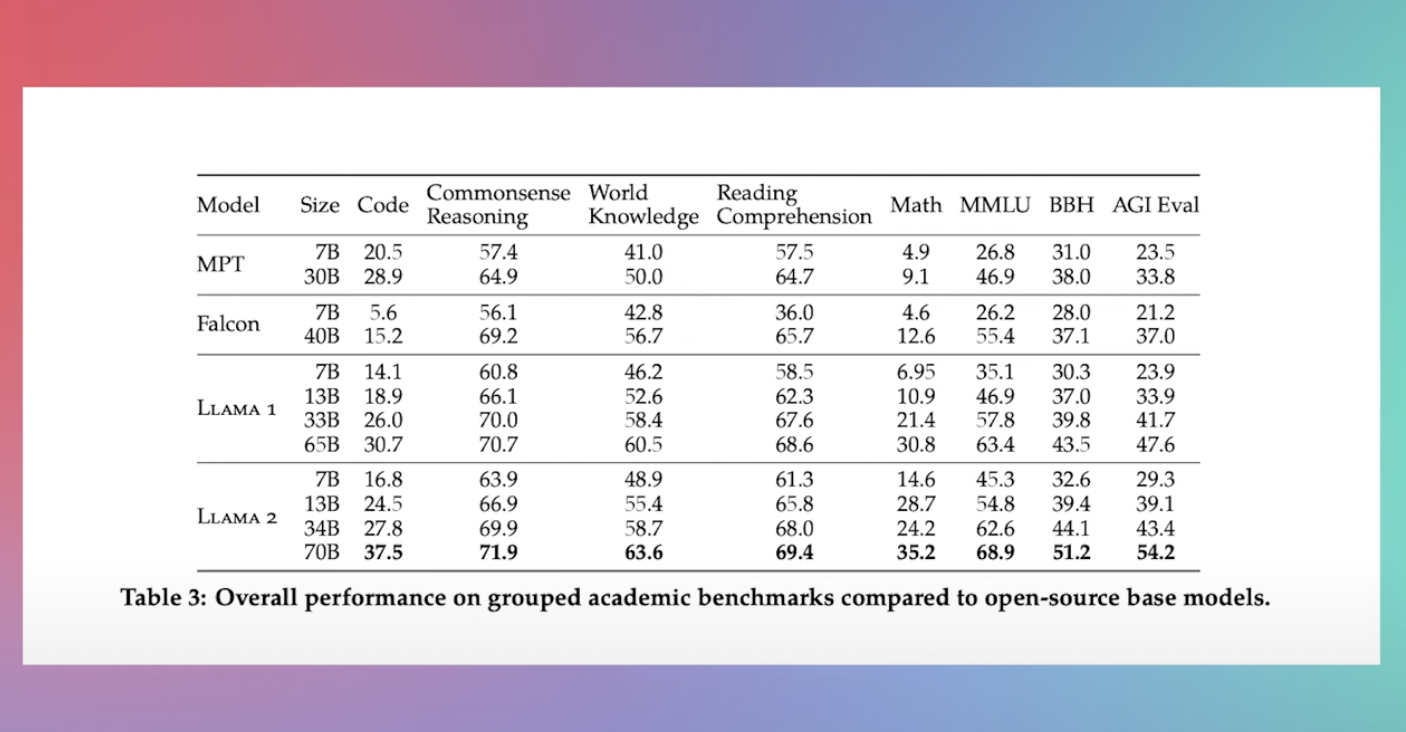

Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. Below you can find and download Llama 2. In this section we look at the tools available in the Hugging Face ecosystem to efficiently train Llama 2 on simple hardware and show how to fine-tune the 7B version of Llama 2 on a ... We have collaborated with Kaggle to fully integrate Llama 2, offering pre-trained, chat, and CodeLlama models in various sizes. To download Llama 2 model artifacts from Kaggle, you must first request a ... Llama 2 outperforms other open source language models on many external benchmarks, including reasoning, coding, proficiency, and knowledge tests. Llama 2: the next generation of our open ... All three Llama 2 model sizes (7B, 13B, 70B) are trained on 2 trillion tokens and ...

I was testing llama-2 70b q3_K_S at 32k context with the following arguments: `-c 32384 --rope-freq-base 80000 --rope-freq-scale 0.5`. LLaMA-65B and 70B perform optimally when paired with a GPU that has a minimum of 40 GB VRAM. Suitable examples of GPUs for this ... GPU VRAM minimum requirements for multiple GPUs running a 70B Llama 2 model at 4 bits (GenAI Stack Exchange). The largest and best model of ... Llama-2 7b may work for you with 12 GB VRAM. You will need 20-30 GPU hours and a minimum of 50 MB of high-quality raw text files, no page ...
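As a rough sanity check on VRAM figures like the ones above, the memory needed for the weights alone can be estimated from the parameter count and the bits stored per weight. The sketch below is only a back-of-the-envelope calculation: the function name `weight_memory_gib` is made up for illustration, the parameter counts are the nominal 7B/13B/70B figures rather than exact counts, and the estimate ignores the KV cache, activations, and any per-block overhead of a particular quantization format.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GiB.

    Assumption: every parameter is stored with the same number of bits.
    KV cache, activations, and quantization metadata are NOT included.
    """
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 2**30


# Nominal parameter counts (assumed round numbers, not exact counts).
MODEL_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

if __name__ == "__main__":
    for name, n_params in MODEL_SIZES.items():
        fp16 = weight_memory_gib(n_params, 16)
        int4 = weight_memory_gib(n_params, 4)
        print(f"Llama 2 {name}: ~{fp16:.1f} GiB at 16 bits, ~{int4:.1f} GiB at 4 bits")
```

Under these assumptions, a 70B model at 4 bits needs roughly 33 GiB for weights, which helps explain why a single 40 GB GPU, or several smaller GPUs, is suggested for it. Real usage will be higher once context length and runtime buffers are added.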

Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 7B pretrained model, converted for the ... "Llama 2 is here - get it on Hugging Face": a blog post about Llama 2 and how to use it with Transformers and PEFT. "LLaMA 2 - Every Resource you need": a compilation of relevant resources to ... Llama 2 is being released with a very permissive community license and is available for commercial use. The code, pretrained models, and fine-tuned models are all being ...
